Terraforming Azure, Part 1


The Beginning of the Project

In this article series, I will document the development of my latest project. This will likely be part technical guide, part project diary.

As luck would have it, in my current role as an automation engineer/cloud architect, I have been tasked with (re)architecting my company's entire Azure deployment. This is almost a “greenfield” project: the company has been using Azure for a while, but the existing deployment was not well architected, having been set up mostly manually in a “fire and forget” fashion. (The company is mostly on-prem and lacks cloud/DevOps expertise, which makes this a bit of a challenge, since I am the only person with experience in these areas. But with half a decade of IaC and cloud experience under my belt, I am up to the challenge!)

My defined goals are:

- Manage the deployment with Terraform (well, almost entirely).

- The company asked me to use Azure Landing Zones and the Cloud Adoption Framework (CAF) to guide the deployment. The idea is not to follow them religiously but to comply with them as much as possible.

- Since the company uses SonicWall as its on-prem firewall, management asked me to use a SonicWall virtual appliance (instead of the Azure-native options) to connect on-prem and cloud.

- As a general principle, “cloud-smart” and “cost-efficient” are high on the priority list.

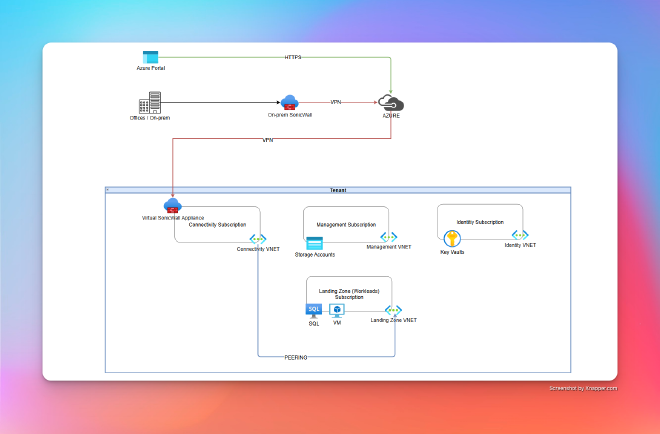

The Overall Design

At a very high level, the diagram above represents my “battle plan.”

Notable points:

- Configuring the firewalls is not within my scope: I am only responsible for deploying the appliance, and the network team is in charge of the VPN.

The subscriptions are:

- Connectivity: the subscription where the firewall is deployed and the VPN is configured.

- Landing Zone: the subscription hosting the main infrastructure. It currently focuses on one application, but the idea is to have multiple landing zones, each with its own application.

- Management: the subscription where the management resources are deployed (for now, mostly the state file storage).

- Identity: currently used only for Key Vaults, which store the passwords and keys for VMs.

The Multi-Subscription Challenge

As outlined above, we opted for one tenant with multiple subscriptions. This means I have to declare several `azurerm` provider configurations, each with its own alias (I recommend reading this great write-up on the subject). In our case, the deployment is relatively small (only four subscriptions for now), so this is manageable. With hundreds of subscriptions, however, I imagine it would get considerably harder… but that is a challenge for another day.
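One way to soften that scaling problem, sketched here rather than taken from my setup, is to collect every subscription ID in a single map variable keyed by role, with a validation rule that fails early if a role is missing. Terraform still needs one `provider` block per alias, since provider blocks do not accept `for_each`, but each block then only looks up its key. The variable name `subscription_ids` and its keys are hypothetical.

```hcl
# Hypothetical: a single map holds every subscription ID, keyed by role
variable "subscription_ids" {
  type        = map(string)
  description = "Subscription IDs keyed by role (hypothetical layout)"

  validation {
    # Fail at plan time if any required role is missing from the map
    condition = alltrue([
      for role in ["connectivity", "management", "identity", "lz_empirics"] :
      contains(keys(var.subscription_ids), role)
    ])
    error_message = "The subscription_ids map must define connectivity, management, identity and lz_empirics."
  }
}

# Provider blocks cannot use for_each, so each alias is still written out,
# but it only looks up its own key in the map
provider "azurerm" {
  alias           = "connectivity"
  subscription_id = var.subscription_ids["connectivity"]
  features {}
}

provider "azurerm" {
  alias           = "lz_empirics"
  subscription_id = var.subscription_ids["lz_empirics"]
  features {}
}

# The management and identity aliases follow the same pattern
```

With this layout, adding a landing zone means one new map entry and one new provider block, rather than a new variable as well.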

For now, here is my providers.tf file:

```hcl
terraform {
  required_providers {
    azurerm = {
      source  = "hashicorp/azurerm"
      version = "~> 4.18.0"
    }
  }
}

# One variable per subscription; replace the placeholder defaults with real IDs
variable "con_subscription_id" {
  type        = string
  description = "ID for the connectivity subscription"
  default     = "<subscription ID>"
}

variable "mgmt_subscription_id" {
  type        = string
  description = "ID for the management subscription"
  default     = "<subscription ID>"
}

variable "id_subscription_id" {
  type        = string
  description = "ID for the identity subscription"
  default     = "<subscription ID>"
}

variable "landingzone_empirics_subscription_id" {
  type        = string
  description = "ID for the Empirics landing zone subscription"
  default     = "<subscription ID>"
}

# One aliased provider configuration per subscription
provider "azurerm" {
  alias           = "connectivity"
  subscription_id = var.con_subscription_id
  use_msi         = false # do not authenticate with a managed identity
  features {}
}

provider "azurerm" {
  alias           = "management"
  subscription_id = var.mgmt_subscription_id
  use_msi         = false
  features {}
}

provider "azurerm" {
  alias           = "identity"
  subscription_id = var.id_subscription_id
  use_msi         = false
  features {}
}

provider "azurerm" {
  alias           = "lz_empirics"
  subscription_id = var.landingzone_empirics_subscription_id
  use_msi         = false
  features {}
}
```

With this setup, I can then set the provider for individual resources, like so:

```hcl
resource "azurerm_public_ip" "bastion_pip" {
  provider = azurerm.connectivity

  # other parameters
}
```

For modules, the provider is passed through the `providers` argument instead, which maps the module's `azurerm` provider to one of the aliased configurations:

```hcl
module "empirics_test_vms" {
  source = "../../modules/terraform-azurerm-windows-vms"

  providers = {
    azurerm = azurerm.lz_empirics
  }

  # other parameters
}
```
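When one module has to work across two subscriptions, for example a VM module that reads its admin password from a Key Vault in the identity subscription, the module can declare an extra provider slot with `configuration_aliases`, and the caller fills both slots in `providers`. This is a minimal sketch: the Key Vault, resource group and secret names are hypothetical, and so is the assumption that the module reads secrets at all.

```hcl
# --- Inside the module (hypothetical file: versions.tf) ---
terraform {
  required_providers {
    azurerm = {
      source  = "hashicorp/azurerm"
      version = ">= 4.0"
      # Declares a second, aliased azurerm slot that the caller must fill
      configuration_aliases = [azurerm.identity]
    }
  }
}

# Look up the Key Vault in the identity subscription
data "azurerm_key_vault" "vm_secrets" {
  provider            = azurerm.identity
  name                = "kv-example-identity" # hypothetical
  resource_group_name = "rg-example-identity" # hypothetical
}

# Read the VM admin password; use it via .value in the VM resource
data "azurerm_key_vault_secret" "vm_admin_password" {
  provider     = azurerm.identity
  name         = "vm-admin-password" # hypothetical
  key_vault_id = data.azurerm_key_vault.vm_secrets.id
}

# --- In the root module: map both provider slots ---
module "empirics_test_vms" {
  source = "../../modules/terraform-azurerm-windows-vms"

  providers = {
    azurerm          = azurerm.lz_empirics # default slot: the VMs land here
    azurerm.identity = azurerm.identity    # aliased slot: Key Vault lookups
  }

  # other parameters
}
```

Resources in the module that do not set `provider` keep using the default slot, so only the cross-subscription lookups need the alias.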

The Backend

Another challenge to address is managing the backend for the Terraform state.

There are several ways to manage this (please refer to the official documentation for more details). Since this project lives on Azure, I opted for Azure Blob Storage (the `azurerm` backend).

```hcl
terraform {
  # Backend blocks cannot reference variables, so the values are literals
  backend "azurerm" {
    resource_group_name  = "rg-state-03-core-prod-ne"
    storage_account_name = "ststate03coreprodne"
    container_name       = "stct-state-03-core-prod-ne"
    key                  = "terraform.state_03_core"
    subscription_id      = "<subscription ID>"
  }
}

# Common tags (declaration assumed here; it is not part of the original snippet)
variable "tags" {
  type    = map(string)
  default = {}
}

resource "azurerm_resource_group" "state_03_core" {
  provider = azurerm.management
  name     = "rg-state-03-core-prod-ne"
  location = "northeurope"

  # timestamp() changes on every run; ignore_changes keeps the value set at creation
  tags = merge(var.tags, {
    CreationTimeUTC = timestamp()
    Environment     = "CORE"
  })

  lifecycle {
    ignore_changes = [
      tags["CreationTimeUTC"]
    ]
  }
}

resource "azurerm_storage_account" "state_03_core" {
  provider                 = azurerm.management
  name                     = "ststate03coreprodne"
  resource_group_name      = azurerm_resource_group.state_03_core.name
  location                 = azurerm_resource_group.state_03_core.location
  account_tier             = "Standard"
  account_replication_type = "ZRS"

  allow_nested_items_to_be_public = false
  min_tls_version                 = "TLS1_2"

  # With public access disabled, whatever runs Terraform against this backend
  # must reach the account over a private network path (e.g. a private endpoint)
  public_network_access_enabled = false

  depends_on = [azurerm_resource_group.state_03_core]

  tags = merge(var.tags, {
    CreationTimeUTC = timestamp()
    Environment     = "CORE"
  })

  lifecycle {
    ignore_changes = [tags["CreationTimeUTC"]]
  }
}

resource "azurerm_storage_container" "state_03_core" {
  provider           = azurerm.management
  name               = "stct-state-03-core-prod-ne"
  storage_account_id = azurerm_storage_account.state_03_core.id

  depends_on = [azurerm_storage_account.state_03_core, azurerm_resource_group.state_03_core]
}

# Used to fill in the backend block above once the resources exist
output "backend_resource_group_name" {
  value = "State file RESOURCE GROUP: ${azurerm_resource_group.state_03_core.name}"
}

output "backend_storage_account_name" {
  value = "State file STORAGE ACCOUNT: ${azurerm_storage_account.state_03_core.name}"
}

output "backend_storage_container_name" {
  value = "State file STORAGE CONTAINER: ${azurerm_storage_container.state_03_core.name}"
}
```
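Since losing this storage account means losing the state of everything built on top of it, it may be worth hardening it further. The following is a sketch of additions to the same storage account resource: blob versioning and soft delete make accidental overwrites or deletions of the state recoverable, and `prevent_destroy` makes Terraform reject any plan that would destroy the account. The 30-day retention is an example value, not part of my setup.

```hcl
resource "azurerm_storage_account" "state_03_core" {
  provider = azurerm.management

  # other parameters, as in the storage account block above

  blob_properties {
    # Keep previous versions of the state blob after each write
    versioning_enabled = true

    # Soft delete: deleted blobs and containers stay recoverable for 30 days (example value)
    delete_retention_policy {
      days = 30
    }
    container_delete_retention_policy {
      days = 30
    }
  }

  lifecycle {
    # Any plan that would destroy this account fails instead
    prevent_destroy = true
    ignore_changes  = [tags["CreationTimeUTC"]]
  }
}
```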

A few things to note:

- I usually set this up like so:

  - First, I comment out the `backend` block in the `terraform` block.
  - Then, I run `terraform init`/`terraform plan`/`terraform apply` to create the backend resources. At this point, I am briefly using a local backend.
  - Once the resources are deployed, I uncomment the `backend` block and set its values to the ones from the `terraform output` command.
  - Finally, I run `terraform init --migrate-state` to migrate the state to the new backend.

- There are other ways to do this, such as using the Azure CLI, PowerShell, or even the Azure Portal, but I like the peaceful life…
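A variation on the final step, sketched here as an option rather than what I do, is Terraform's partial backend configuration: the `backend` block in code keeps only the backend type, and the values copied from `terraform output` go into a separate file passed to `terraform init` with `-backend-config`. The file name `backend.hcl` is hypothetical; the values are the ones from the backend block above.

```hcl
# backend.tf: backend type only; the settings are supplied at init time
terraform {
  backend "azurerm" {}
}

# backend.hcl (hypothetical file name) holds plain key = value pairs, no block:
#   resource_group_name  = "rg-state-03-core-prod-ne"
#   storage_account_name = "ststate03coreprodne"
#   container_name       = "stct-state-03-core-prod-ne"
#   key                  = "terraform.state_03_core"
#   subscription_id      = "<subscription ID>"
#
# Then migrate the local state into the configured backend:
#   terraform init -backend-config=backend.hcl -migrate-state
```

This keeps the state location out of the Terraform code, so the same configuration can point at a different container per environment. The bootstrap itself does not change: the `backend` block still has to stay commented out until the storage account exists.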

Code

To be continued